Is AI Safe for Kids? Complete Parent Safety Guide by Age

⚡ Key Takeaways

- AI safety isn't one-size-fits-all - what's appropriate changes dramatically from age 3 to age 13.

- The biggest risk isn't the technology itself - it's unsupervised access and unclear boundaries.

- Start with parent-created content for ages 3-5, guided exploration for 6-8, monitored independence for 9-13.

- COPPA compliance and proper account setup prevent most privacy concerns before they start.

- After 8 months of testing with three kids, we've found AI can be safer than YouTube when used correctly.

Last month, my friend Sarah texted me at 10pm: "My 5-year-old saw me using ChatGPT and wants to try it. Is that safe? What do I even say?"

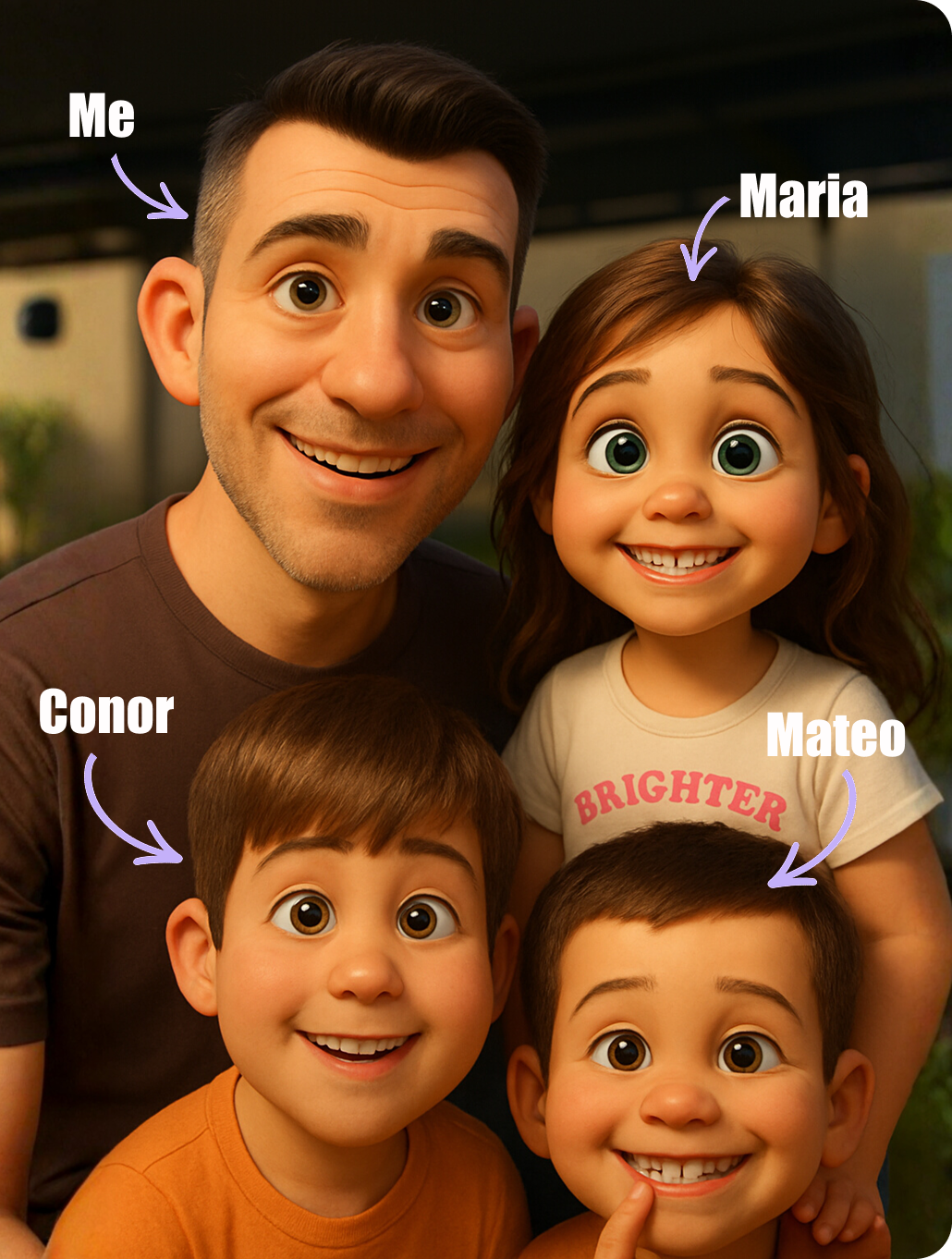

I get this question constantly. And honestly? A year ago, I would've panicked too. But after 8 months of testing AI tools with my own three kids - ages 3, 5, and 8 - I've learned something surprising: AI can actually be safer than the apps we've been letting our kids use for years, if you know how to set it up correctly.

The problem isn't the technology. It's that most AI safety advice treats all kids the same, gives vague warnings about "being careful," and leaves parents more confused than before. What's actually safe for a 3-year-old vs. a 13-year-old? How much supervision do you really need? When should you say no?

In this guide, I'll share exactly what I've learned testing dozens of AI tools with my own children - the specific ages when different tools make sense, the supervision systems that actually work in real life, and the honest mistakes we made so you can skip them. No fear-mongering, no tech jargon. Just real parent insights from someone who's been in the trenches.


Why AI Safety Matters (But It's Not What You Think)

When most parents hear "AI safety for kids," they picture scary scenarios: chatbots saying inappropriate things, kids accessing content they shouldn't see, or AI somehow "corrupting" their child's development.

After 8 months of regular AI use with my kids, here's what I've actually encountered: zero inappropriate content, zero scary interactions, and surprisingly few problems overall. But we did face real challenges - just not the ones I expected.

The real risks we experienced:

- My 5-year-old becoming frustrated when AI misunderstood his verbal requests (emotional regulation issue, not safety issue)

- My 8-year-old wanting to use AI for homework shortcuts instead of learning (academic integrity, not content safety)

- All three kids asking to use AI tools constantly once they discovered them (screen time management, not predator danger)

- Almost setting up an account for a child under 13 in a way the tool's age rules don't allow (a legal/privacy issue we caught early)

None of these are the dystopian scenarios you read about in news headlines. They're normal parenting challenges that have practical solutions.

💡 Parent Insight: After 8 months of testing, I'm more concerned about my kids' YouTube access than their supervised AI use. At least with AI tools, I control the input prompts and can review every output before they see it.

The Real Question: What's Safe for YOUR Child's Age

Here's what nobody tells you: a tool that's perfectly safe for a 10-year-old can be completely inappropriate for a 4-year-old, even with the exact same parental controls.

It's not about the tool's safety features. It's about developmental readiness.

My 3-year-old doesn't need access to any AI tool directly - but he loves AI-generated content I create for him. My 8-year-old can handle guided exploration of image generation tools with me sitting next to her. The supervision needs are completely different.

Let me break down what I've learned works for each age group, based on real testing with my own kids.

Ages 3-5: Parent-Created Content Only (Zero Direct Access)

At this age, AI isn't something your child uses - it's something you use for your child. They never touch the keyboard, never see the interface, never interact with AI directly.

What's appropriate:

- You create personalized content using AI (stories, songs, images) and share the finished result

- You're present for 100% of the experience

- AI is invisible to them - they just see the story or picture you made

- Session length: 10-15 minutes maximum

Real example from our testing:

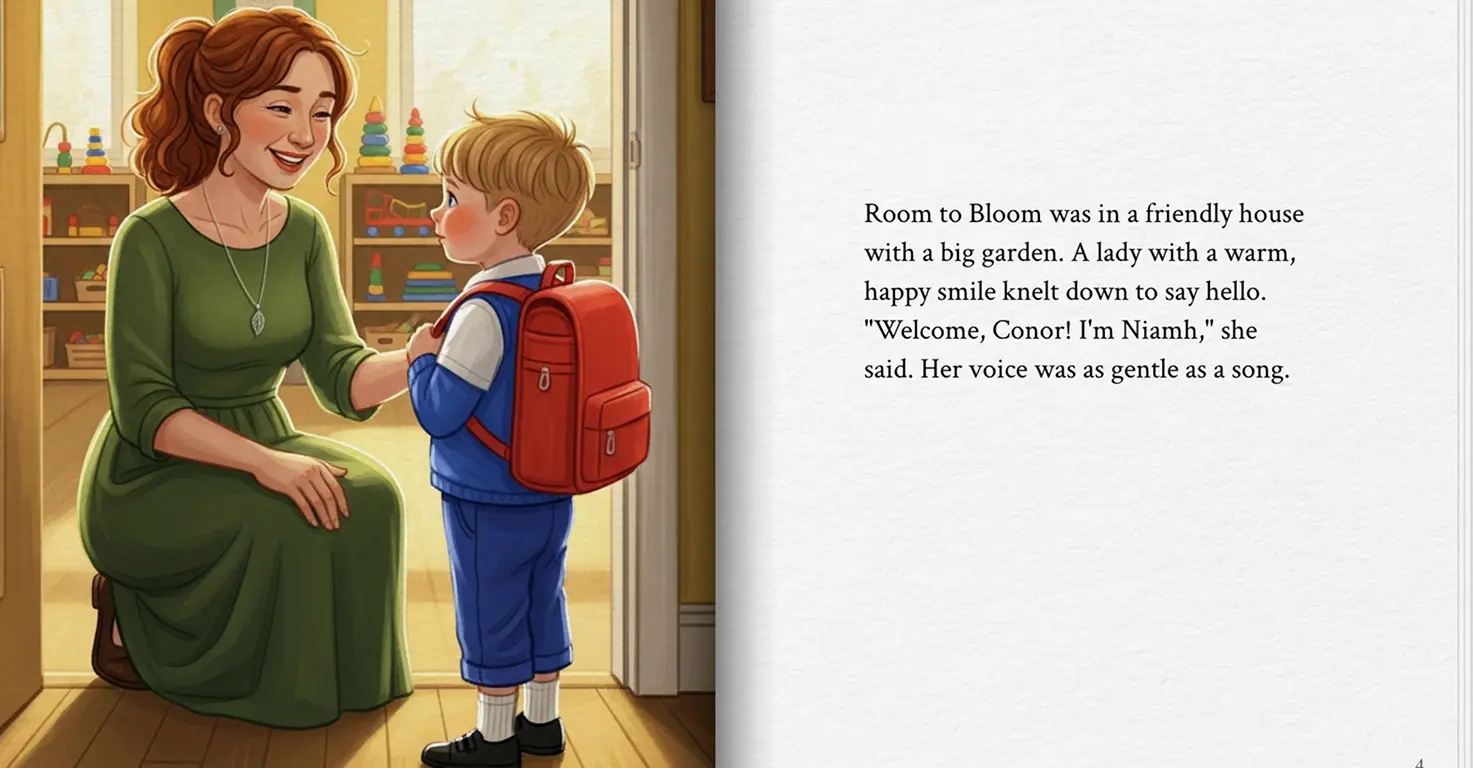

When my 3-year-old was terrified about starting preschool, I used Gemini Storybook to create a personalized story featuring his teacher's photo, his classroom, and his specific fears about drop-off time.

It took me 20 minutes to create. I uploaded photos, wrote specific prompts about his anxiety ("scared mommy won't come back"), and reviewed every page before showing him. He never saw me creating it - he just got to read a story about a character who looked like him, in his actual classroom, learning that mommy always comes back.

We read that story every night for two weeks. First day of school? He walked in without tears.

Key safety boundaries:

- Child never creates prompts or inputs

- You review 100% of AI output before sharing

- No accounts in child's name (everything is your adult account)

- AI tools are on your devices only, never on tablets they access independently

- Focus on addressing real fears or building skills (letters, numbers, emotional regulation)

🔒 Safety Note: At ages 3-5, the safety question isn't "Is this tool safe?" - it's "Am I creating content that's developmentally appropriate?" You're the filter, not the technology.

What worked: Creating personalized educational content was incredibly effective. My 5-year-old learned his letter sounds faster with AI-generated flashcards featuring his favorite dinosaurs than he ever did with generic alphabet apps.

What didn't work: Trying to let my 5-year-old "help" create prompts. His verbal instructions were too vague ("make it cool!"), which frustrated him when results didn't match his imagination. Better to interview him about what he wants, then create it myself.

Supervision level: FULL - You do everything, they experience the result.

Ages 6-8: Guided Co-Creation (Side-by-Side Only)

This is the age when kids can start participating in the creation process, but only with you physically next to them, controlling the final inputs.

What's appropriate:

- Child describes what they want, you help refine the prompt together

- They watch the AI generate results

- You discuss what worked and what didn't

- They make choices between AI-generated options ("Which style do you like better?")

- Session length: 20-30 minutes, 2-3 times per week maximum

Real example from our testing:

My 8-year-old daughter wanted to create illustrations for a story she wrote about a girl who discovers a magical library. We used image generation tools together (sitting side-by-side at my laptop) over three sessions.

Our process:

- She described what she wanted: "A girl with brown hair in a huge library with floating books"

- I helped refine: "Let's add 'warm lighting' so it feels magical, not scary"

- We reviewed the first result together

- She pointed out what to change: "The books should glow more"

- I adjusted the prompt and regenerated

- After 4-5 iterations, she picked her favorite

The entire project took about 90 minutes across three days. She was incredibly proud of her illustrated story and learned how to give specific feedback to improve AI results.

Key safety boundaries:

- You type all prompts (they dictate, you refine and enter)

- Your adult account only - never logged into child account

- You preview every result before they see it (image generation can occasionally produce weird results)

- Discuss why certain prompts work better than others (teaching critical thinking)

- No solo access - if you leave the room, close the laptop

Tools we've tested successfully at this age:

- Gemini Storybook for creating personalized stories they help design

- Image generation for school projects and creative writing (with heavy supervision)

- Suno for creating silly songs together (their lyrics, AI music)

💡 Parent Insight: The magic age when kids can truly participate is around 7-8 years old. Before that, they get frustrated by the back-and-forth refinement process. Wait until they can handle "that's not quite right, let's try again" without melting down.

What worked: Treating AI like a collaborative art project. My daughter loved the iteration process - seeing how small prompt changes created big differences. It taught patience and specificity in communication.

What didn't work: Trying to use ChatGPT for homework help at this age. The text-heavy interface bored her, and she wanted me to just "ask it to write my book report." That opened a tough conversation about learning vs. cheating that was honestly too abstract for her 8-year-old brain. We shelved ChatGPT until she's older.

Supervision level: HIGH - You're physically present, controlling inputs, reviewing outputs.

Ages 9-13: Monitored Independence (Gradual Autonomy)

This is the trickiest age range because kids are developmentally ready for more independence, but their judgment is still forming. They can use some AI tools semi-independently - but only specific tools, with clear rules and regular check-ins.

What's appropriate:

- Approved tools only (you've researched and tested first)

- Account on your device that you can monitor

- Clear rules about what AI can help with vs. what they must do alone

- Regular conversations about AI limitations and when to verify information

- Weekly reviews of their AI usage together

Real example from our testing:

My 13-year-old nephew used AI tools for a science project about renewable energy, with specific guidelines we established together:

Allowed uses:

- Explaining complex concepts in simpler terms

- Suggesting research questions he hadn't considered

- Checking grammar and clarity in his written report

- Creating comparison charts from data he'd already collected

Not allowed:

- Writing any paragraphs for him

- Generating citations without verification

- Creating content he'd submit as original work

- Using AI late at night without parent awareness

He spent about 2 hours over a week using AI as a research assistant. His project earned an A, and his teacher specifically praised his clear explanations of technical concepts - which he'd learned by asking AI to simplify them first, then writing his own version.

Key safety boundaries:

- You set up the account together and know the password

- Random spot-checks: "Show me what you used AI for this week"

- Explicit discussions about academic integrity

- Rules about topics that are off-limits (anything personal, violent, or sexual)

- They know you can review chat history anytime

- Time limits: 30-45 minutes per day maximum

⚠️ Critical Boundary: At this age, the safety issue shifts from "inappropriate content" to "AI dependency." The real risk is kids using AI to avoid learning, not AI showing them something scary. Set crystal-clear rules about when AI assists vs. replaces their own thinking.

What worked: Framing AI as a "study buddy" rather than a shortcut. When we positioned it as a tool to understand concepts better (so he could explain them himself), my nephew used it responsibly. When we just said "don't cheat," it was too vague.

What didn't work: Assuming he'd self-regulate. We tried giving him full access for a week to "see how it goes." Within three days, he was asking AI to write entire paragraphs. We pulled back, re-established boundaries, and required weekly check-ins. Independence at this age needs scaffolding.

Supervision level: MODERATE - Not physically present, but regular check-ins and spot monitoring.

Universal Safety Checklist (All Ages)

Regardless of your child's age, these non-negotiables apply to any AI tool you consider:

1. COPPA Compliance

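The Children's Online Privacy Protection Act (COPPA) is a U.S. law that requires online services to get verifiable parental consent before collecting personal information from children under 13. It's one reason many general-purpose AI tools set a minimum age of 13 or older. Here's what this means practically for parents: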

- Under 13: Child should NEVER have an account in their own name. Use your adult account only.

- Ages 13+: Child can have account, but you set it up together and maintain access

- Red flag: Any tool that doesn't clearly state age requirements or asks for child's birthdate without parental verification

We made this mistake early on. I almost set up a "kids account" for my 8-year-old on an AI tool before reading the fine print. The account would have gone against the tool's age requirements and potentially exposed her data. Now I use my account exclusively for anything involving my kids under 13.

2. Account Setup Rules

Our family's non-negotiables for any AI tool:

- Account email: Your adult email, never child's email

- Account name: Generic or parent's name, never child's full real name

- Profile info: Minimal information, never upload child's photo to profile

- Payment: Your credit card only, never let kids access billing

- Password: You know it, you control access

3. Content Filtering Reality Check

Here's the truth about AI content filters: they're pretty good, but not perfect.

After 8 months of testing, I've encountered:

- Zero sexually explicit content (filters work well here)

- Zero violent content when using kid-focused tools

- One time an image generator created a slightly scary-looking character when my daughter asked for "spooky witch" (not traumatizing, just startling)

- Several times AI gave confidently wrong information that could've misled my kids if I hadn't fact-checked

The real risk isn't offensive content - most AI tools are heavily filtered. The real risk is kids believing AI-generated information without verification.

🔒 Safety Rule: Any "fact" AI tells your child needs verification from a second source. We have a house rule: "If AI teaches you something new, show me so we can confirm it's true." It treats AI as a starting point, not an authority.

4. Screen Time Balance

AI tools are incredibly engaging. Too engaging. My kids would use them for hours if I let them.

Our limits by age:

- Ages 3-5: 10-15 minutes, 2-3 times per week (experiencing AI content you created)

- Ages 6-8: 20-30 minutes, 2-3 times per week (co-creating with you)

- Ages 9-13: 30-45 minutes per day maximum (semi-independent use)

These limits are for AI specifically, separate from other screen time. And honestly? We don't always hit them. Some weeks we use AI once, some weeks it's daily. The guideline matters more than perfect consistency.

5. When to Say No

After 8 months of testing, here are the situations where I've said "no" to AI access:

Age-inappropriate tool: My 5-year-old asked to use ChatGPT because he saw his sister using it. No. The text interface is wrong for his age, and he's not ready for open-ended AI conversations.

Wrong motivation: My 8-year-old wanted to use AI to "write my book report so I can play outside faster." Hard no. AI assists learning, doesn't replace it.

Too late at night: AI use in our house ends at 7pm for ages 3-8, 8pm for ages 9-13. Screens before bed are already tough; AI's engagement level makes it worse.

Personal information requests: Any time a tool asks for information about our family, full names (beyond first names), photos of my kids' faces (except in closed tools like Gemini Storybook, where I control everything), or location data, it's an automatic no.

When I haven't tested it first: My kids ask about new AI tools constantly. My answer is always: "Let me try it this week, then we'll decide together." I never let them be the guinea pig.

What Didn't Work: Our Biggest Mistakes

Let me save you from the errors we made in our first few months of AI testing.

Mistake #1: Assuming "Kid-Friendly" Meant "Safe for All Kids"

I let my 6-year-old try an AI drawing tool marketed as "for kids ages 5+" without testing it first. Within 10 minutes, she was frustrated to tears because the prompts required specificity she didn't have yet ("Do you want watercolor or digital art style?"). The age recommendation was technically accurate, but not for her developmental stage.

Lesson: Test tools yourself first, regardless of marketing claims. Age ranges are guidelines, not guarantees.

Mistake #2: Not Having Clear Rules Before Starting

We dove into AI tools without establishing boundaries first. This led to my 8-year-old assuming she could use AI anytime, asking constantly, and melting down when I said no. We were making up rules on the fly, which felt arbitrary to her.

Lesson: Set rules before introducing any tool. Write them down. Refer to them when kids push back. "Remember, we agreed AI time is twice a week after homework."

Mistake #3: Not Checking In Regularly

When I gave my nephew (13) semi-independent access, I assumed he'd follow our guidelines without regular check-ins. Wrong. After two weeks without reviewing his usage, I discovered he'd been using AI to write entire homework paragraphs.

It wasn't malicious - he genuinely thought "improving my draft" meant "having AI rewrite it." We needed clearer definitions and weekly reviews.

Lesson: Independence requires oversight. Schedule weekly 10-minute check-ins: "Show me what you made with AI this week."

Mistake #4: Over-Explaining Safety Risks

In my first safety talk with my 8-year-old, I gave a 20-minute lecture about data privacy, inappropriate content, and online predators. Her eyes glazed over. She retained nothing.

Better approach: Simple rules, repeated often. "We use AI together." "AI makes mistakes, so we check facts." "If something feels weird, tell me immediately." Short, clear, age-appropriate.

Mistake #5: Treating All AI Tools the Same

I initially lumped all AI tools into one mental category and applied the same rules to everything. But Gemini Storybook (closed system, I create everything) needs different boundaries than ChatGPT (open-ended conversations).

Lesson: Each tool needs individual assessment. Just because one AI tool is safe doesn't mean all are.

Our Family's Safety System (What Actually Works)

After 8 months of trial and error, here's the system that's worked consistently in our house:

Sunday Planning (5 minutes): We decide together which AI projects we'll do that week. Kids request ideas, I approve or suggest modifications. This prevents constant asking and gives them something to look forward to.

During Use: My phone stays nearby so I can snap a quick photo of anything concerning, laptop screens stay visible to the household (no closed-door AI use), and a clear timer keeps sessions from running long.

After Each Session (2-3 minutes): Quick verbal debrief. "What did you create? What was tricky? Did anything surprise you?" This catches issues immediately.

Weekly Review (10 minutes): Sunday evening, we look at the week's AI creations together. Celebrate cool projects, discuss any concerns, plan next week.

Monthly Reset: First Sunday of each month, we review our AI rules together. "What's working? What should we change?" Kids get input, rules evolve as they grow.

💡 Parent Insight: In our house, the weekly 10-minute review catches the vast majority of issues before they become problems. It's the single most valuable part of our safety system. Don't skip it.

The Bottom Line: AI Can Be Safer Than You Think

After 8 months of regular AI use with my kids ages 3, 5, and 8, I'm no longer worried about AI safety the way I was at the start.

Here's what I've learned: AI isn't inherently dangerous. Unsupervised access and unclear boundaries are dangerous. The technology is surprisingly safe when used correctly.

My kids have created hundreds of AI-generated stories, images, and projects. We've had zero scary encounters, zero inappropriate content, and zero situations where I thought "I shouldn't have let them try this."

But we've also had zero unsupervised sessions. That's the key.

Start here, based on your child's age:

Ages 3-5: Try our Gemini Storybook school anxiety project to create a personalized story addressing one of your child's fears. You create it, they experience it. 20-minute time investment, potentially huge emotional benefit.

Ages 6-8: Explore our Best AI Tools for Kids 2026 guide to find age-appropriate creative tools you can use together. Start with one tool, one project, side-by-side. See how your child responds before expanding.

Ages 9-13: Read our Ultimate Guide to AI Tools for Kids for a comprehensive breakdown of tools that work for this age group, then set up one approved tool with clear boundaries. Start with homework assistance (grammar checking, concept explanation) before moving to creative projects.

All ages: Establish your family's safety rules before introducing any AI tool. Use our safety checklist as a starting point, then modify it for your values and your child's maturity level.

The goal isn't perfect safety - nothing is perfectly safe. The goal is equipping your kids to use powerful technology responsibly, with appropriate supervision for their age, while building critical thinking skills they'll need for a future full of AI.

You've got this. Start small, stay involved, and adjust as you learn what works for your family.

Want weekly AI safety updates, new tool reviews tested with real kids, and project ideas by age? Join 1,000+ parents getting honest insights from someone testing this technology with his own children. I share what works, what doesn't, and exactly how to keep your kids safe while preparing them for an AI-powered future.